This technical blog is my own collection of notes, articles, implementations and interpretations of topics in coding, programming, data analytics, data science, data warehousing, cloud applications and artificial intelligence. Feel free to explore my blog and articles for reference and downloads. Do subscribe, like, share and comment ---- Vivek Dash

Thursday, May 6, 2021

Tuesday, May 4, 2021

Notes on Linear Regression with one variable , Cost Function , Objective determination of a regression function , Interpretation and Scenario example

Monday, May 3, 2021

Linear Regression in One Variable ( Uni-variate ) expression with small short examples - short hand-written summary notes

Notes on Supervised Machine Learning - Handwritten infographic short-points and scenarios

Monday, April 26, 2021

The Various Categories of Machine Learning Algorithms with their Interpretational learnings

Machine Learning comes in three different flavours, depending on the algorithm and the objective it serves. One can divide machine learning algorithms into three main groups based on their purpose:

01) Supervised Learning

02) Unsupervised Learning

03) Reinforcement Learning

In this article we will look at each of these learning techniques in greater detail.

==================================

01) Supervised Learning

==================================

* Supervised Learning occurs when an algorithm learns from example data and associated target responses, which can be numeric values or string labels such as classes or tags, so that it can later predict the correct response when it encounters new examples

* The supervised learning approach is similar to human learning under the guidance and mentorship of a teacher . This guided teaching and learning of a student under the aegis of a teacher is the basis for Supervised Learning

* In this process , a teacher provides good examples for the student to memorize and understand and then the student derives general rules from the specific examples

* One can distinguish between regression problems, whose target is a numeric value, and classification problems, whose target is a qualitative variable such as a class or a tag

* More on Supervised Learning algorithms, with examples, will be discussed in later articles.
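To make the idea of learning from examples and their target responses concrete, here is a minimal sketch of a regression problem in one variable. The training numbers are made up for illustration, and the function name `fit_line` is my own choice and not part of any library.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical training examples: inputs and their numeric target responses
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [3.0, 5.0, 7.0, 9.0]

intercept, slope = fit_line(train_x, train_y)

# Predict the response for a new example that was not in the training data
prediction = intercept + slope * 5.0
print(intercept, slope, prediction)
```

A classification problem would follow the same pattern, except that the target responses would be labels such as classes or tags instead of numbers.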

==================================

02) Unsupervised Learning

==================================

* Unsupervised Learning occurs when an algorithm learns from plain examples without any associated response in the target variable, leaving it to the algorithm to determine the data patterns on its own

* This type of algorithm tends to restructure the data into something else, such as a new set of features that may represent a class or a new series of uncorrelated values within the data set

* Unsupervised Learning algorithms are quite useful in providing humans with insights into the meaning of the data, since there are patterns waiting to be found, and in providing new useful inputs to supervised machine learning algorithms

* As a kind of learning, Unsupervised Learning resembles the methods that humans use to figure out whether certain objects or events belong to the same class, by observing the degree of similarity between them

* Some of the recommendation systems that one comes across on retail websites or applications, in the form of marketing automation, are based on this type of learning

* The marketing automation algorithm derives its suggestions from what one has done in the past

* The recommendations are based on an estimation of which group of customers one resembles the most, followed by an inference of one's likely preferences based on that group

==================================

03) Reinforcement Learning

==================================

* Reinforcement Learning occurs when one presents the algorithm with examples that lack labels, as in the case of unsupervised learning.

* However, one can accompany an example with positive or negative feedback according to the solution that the algorithm proposes

* Reinforcement Learning is connected to applications in which the algorithm must make decisions (so the product is mostly prescriptive and not just descriptive, as in the case of unsupervised learning), and on top of that the decisions bear consequences.

* In the human world, Reinforcement Learning is mostly a process of learning by trial and error

* In this type of learning, both initial and later errors help the learner, because learning is tied to a system of penalties and rewards: each error adds a penalty in the form of cost, loss of time, regret, pain and so on, attached to the output of the model to which the reinforcement learning algorithm is applied

* One of the most interesting examples of reinforcement learning occurs when computers learn to play video games by themselves, climbing through the levels of a game simply by learning on their own the mechanics needed to get through each level.

* The application lets the algorithm know what kind of outcome each action leads to.

* One can come across a typical example of a Reinforcement Learning program, developed by Google's DeepMind, which plays old Atari video games on its own, at https://www.youtube.com/watch?v=VieYniJORnk

* From the video, one can notice that the program is initially clumsy and unskilled, but it steadily improves with continued training until it becomes a champion at the task

Descending the Right Curve in Machine Learning - A relation to science fiction and science in practice

* Machine Learning may appear as a magic trick to any newcomer to the discipline, which is something to expect from any application of advanced scientific discovery, as observed by Arthur C. Clarke, the futurist and author of popular science fiction stories like 2001: A Space Odyssey. Machine Learning combines so many things that the sheer scale of the machinery and engineering involved can make it seem incomprehensible, even as it helps a general user to build models and predictions from the patterns identified in a particular dataset

* Arthur C. Clarke stated in his third law that "any sufficiently advanced technology is indistinguishable from magic". In other words, to a common user any sufficiently advanced technology looks like some form of magic, since in magic the trick is to carry off a spectacle without letting the viewer know the working principle behind it

* Though it is widely believed that Machine Learning's underlying strength is some form of imperceptible mathematical, statistical and coding-based magic, it is not magic. Rather, one needs to understand the foundational concepts from scratch so that some of the more complex working mechanisms can be understood. Therefore, it is said that machine learning is the application of mathematical formulations to the task of learning

* Expecting that the world itself is a representation of mathematical and statistical formulations , machine learning algorithms strive to learn about such formulations by tracking them back from a limited number of observations .

* Just as humans have the power of distinction and perception, and can recognise which object is a ball and which is a tree, machine learning algorithms can use the computational power of computers and the wide availability of data on all subjects and domains to learn how to solve a large number of important and useful problems

* It is said that although Machine Learning is a complex subject, humans devised it, and at its inception Machine Learning mimicked the way in which one learns from the surrounding world. On top of that, one can express simple data problems and basic learning algorithms in terms of how a child would perceive and understand the world, or solve a challenging learning problem by using the analogy of descending from the top of a mountain by taking the right slope of descent.

* Now, with a somewhat better understanding of the capabilities of machine learning and how they can help in solving a problem, one can start to learn the more complex facets of the technology in greater detail, with more examples of their proper usage.
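The analogy of descending a mountain by the right slope is usually called gradient descent. The following is only a small sketch of the idea under my own assumptions: the function being minimised, the learning rate and the number of steps are picked for illustration.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Walk downhill by repeatedly stepping against the slope."""
    x = start
    for _ in range(steps):
        x = x - learning_rate * gradient(x)
    return x


# Illustrative "mountain": f(x) = (x - 3)^2, whose slope is 2 * (x - 3)
# and whose lowest point is at x = 3
minimum = gradient_descent(lambda x: 2 * (x - 3), start=10.0)
print(minimum)
```

Each step moves a little in the direction in which the slope goes down, so the position settles close to the bottom of the valley at x = 3.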

Friday, April 23, 2021

Describing the use of Statistics in Machine Learning - A full detailed article on some of the most important concepts in Statistics

Describing the use of Statistics

* It's important to skim through some of the basic concepts related to probability and statistics. Along with that, we will also try to understand how these concepts can help someone to describe the information used by machine learning algorithms

* The gist of all these concepts is not only about how to describe an event by counting the number of occurrences, but also about describing an event without counting every time how many times it occurs.

* If the recording instrument is imprecise, if there is an error in the recording procedure, or if some random nuisance disturbs the measurement, then even a simple measure such as weight will differ every time it is taken, with the scale oscillating slightly around the true weight. Now suppose someone wants to measure the weight of all the people in a city. Doing this in one go is practically impossible: one would have to build a gigantic weighing scale to hold all the people of the city in its pans, and the scale might break once everyone has climbed on. Even if it worked, the machine would be useless once the experiment was over, so the cost of building such a big machine for just one task would be meaningless.

* So the purpose of the experiment might be achieved, but the cost of building the instrument would run so high that it would put a big dent in the city's finances and budget. On the other hand, if we record each person's weight one by one, the entire activity might take weeks or months. The high amount of time and effort consumed in managing the whole exercise makes the idea impractical. And even if the weights of all the people residing in the city were successfully measured, some amount of error would very likely still creep in, so the process would be neither fruitful nor fault-proof

* Having partial information is not a completely negative condition, because one can use the resulting smaller matrices for efficient and less cumbersome calculations. On top of that, sometimes one cannot get a complete sample of what one wants to describe and learn, because the event is highly complex and has a great variety of features. Another example of a sample drawn from a large body of data is Twitter tweets. A collection of tweets may be treated as a sample of data which is processed by tools such as text processors, sentiment analyzers and detectors of spam or abusive content, each working on the short text that a tweet contains.

* Therefore it is a good practice in sampling to group data which has similar characteristics and features, so that the sample fits proper sampling criteria. When sampling is done carefully, one can obtain a better global view of the data from the constituent samples

* In Statistics, a population refers to all the events and objects that one wants to measure according to some given criteria. Using random sampling, which is picking events or objects at random, one chooses one's examples according to criteria that determine how the data is collected, assembled and synthesised. The sample is then fed into machine learning algorithms, which apply their inherent functions to determine patterns and behaviour.

* Along with such determination, a probabilistic model of the input data is built, which is used to predict similar patterns in any newly input data or datasets. Generating data from subsamples of a population and mapping the identified patterns onto new use cases is one of the chief objectives of machine learning and its supporting algorithms

* "Random Sampling" is not the only approach to sampling. One can also apply "stratified sampling", through which one can control some aspects of the random sample in order to avoid picking too many or too few events of a certain kind. After all, a random sample is a random sample: however it is picked, there is no absolute assurance that it will replicate the exact distribution of the population.
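The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the population of "adult" and "child" records is made up, and `stratified_sample` is a hypothetical helper, not a library function.

```python
import random


def stratified_sample(items, key, fraction, seed=0):
    """Draw the same fraction from every group (stratum) of the population."""
    rng = random.Random(seed)
    strata = {}
    for item in items:
        strata.setdefault(key(item), []).append(item)
    sample = []
    for group in strata.values():
        # Take at least one item per stratum so that no group disappears
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical population: 90 adults and 10 children
population = [("adult", i) for i in range(90)] + [("child", i) for i in range(10)]

sample = stratified_sample(population, key=lambda person: person[0], fraction=0.1)

# Count how many of each kind ended up in the sample
counts = {}
for label, _ in sample:
    counts[label] = counts.get(label, 0) + 1
print(counts)
```

A plain random sample of 10 people from the same population could by chance contain no children at all, whereas the stratified sample keeps the 9 to 1 proportion by construction.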

* A distribution is a statistical formulation which describes how to observe an event or a measure by stating the probability of witnessing a certain value. Distributions are described by mathematical formulas and can be shown graphically using charts such as histograms or distribution plots. The information that one puts into the matrix has a distribution, and one may find that the distributions of different features are related to each other. A distribution naturally implies variation, and when dealing with numeric values it is very important to figure out a center of variation, which is often the statistical mean, calculated by summing all the values and then dividing the sum by the total number of values considered.

* Mean - This is a descriptive measure which tells the user the value to expect the most from within a dataset, since most of the time the data hovers around the mean. The mean is best suited to a symmetrical, bell-shaped distribution, where the values above the mean are distributed in the same shape as the values below it. The normal distribution, or Gaussian distribution, is shaped around the mean in this way, which one finds only when the data is not much skewed in either direction. In the real world, many datasets have skewed distributions with extreme values on one side of the distribution, which strongly influence the value of the mean

* Median - The median is the value in the middle after one orders all the observations from the smallest to the largest value within the dataset. Because it is based on the order of the values, the median is less sensitive than the mean to extreme values as a measure of the center of the data.
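The effect of a single extreme value on the mean and on the median can be checked with Python's standard `statistics` module. The two small datasets below are invented for illustration.

```python
import statistics

# A roughly symmetrical dataset and a skewed one with one extreme value
symmetric = [48, 49, 50, 51, 52]
skewed = [48, 49, 50, 51, 500]

mean_symmetric = statistics.mean(symmetric)
median_symmetric = statistics.median(symmetric)

mean_skewed = statistics.mean(skewed)
median_skewed = statistics.median(skewed)

print(mean_symmetric, median_symmetric)
print(mean_skewed, median_skewed)
```

In the symmetrical dataset the mean and the median agree, while in the skewed dataset the single extreme value pulls the mean far away from the bulk of the data and the median stays where it was.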

* Variance - The significance of the mean and median descriptors is that they describe a value within the distribution around which there is variation, and machine learning algorithms generally do care about such variation. Most people refer to this variation as "variance". Since variance is a squared number, there is also a root equivalent, which is termed the "Standard Deviation". Machine Learning takes into account the variance in every single variable (univariate distributions) and in all the features together (multivariate distributions) to determine how such variation impacts the response obtained.

* Statistics is an important matter in machine learning because it conveys the idea that features have a distribution. Distribution implies variation, and variation means quantity of information: the more variance is present in the features, the more information can be matched to the response.

* One can use statistics to assess the quality of the feature matrix and then leverage statistical measures in order to draw a rule from the types of information to the purposes that they cater to.
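As a small worked example of variance and its square root, the sketch below computes both by hand and with the standard `statistics` module. The data values are made up, and the population versions of the formulas (dividing by the number of values) are used as an assumption.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

# Variance by hand: the average squared distance from the mean
mean = sum(data) / len(data)
variance = sum((x - mean) ** 2 for x in data) / len(data)
std_dev = math.sqrt(variance)

# The same quantities from the standard library
library_variance = statistics.pvariance(data)
library_std_dev = statistics.pstdev(data)

print(mean, variance, std_dev)
print(library_variance, library_std_dev)
```

Because the standard deviation is the square root of the variance, it is expressed in the same unit as the data itself, which makes it easier to read.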

Wednesday, April 21, 2021

An article on - Bayes Theorem application and usage

Bayes Theorem application and usage

Instance and example of usage of Bayes Theorem in Maths and Statistics :

P(B|E) = P(E|B) * P(B) / P(E)

If one reads the formula, one comes across the following terms, which can be explained with the help of an example in the following manner:

* P(B|E) - The probability of a belief (B) given a set of evidence (E) is called the posterior probability. In the given case, the hypothesis presented to the reader is that a person is female, given the evidence that the person's hair is sufficiently long

* P(E|B) - This conditional probability expresses how likely the evidence is when the belief is true. In this case, it is the probability that the subject has sufficiently long hair, given that the subject is female.

* P(B) - Here B stands for the general probability of being female, which is the a priori probability of the belief. In the given case, the probability is around 50 percent, that is, a value of about 0.5

* P(E) - This is the general probability of having long hair. In a conditional probability equation this term is also treated as an a priori probability, which means that its estimate is available well in advance, and the value is therefore pivotal for the formulation of the posterior probability

To solve the previous problem using the Bayes formula, one puts all the constituent values into the given equation.

The same kind of reasoning is required to estimate whether a person has a certain disease that is present in part of a population. For this, one undergoes a certain type of test which produces either a positive or a negative result.

Generally, most medical tests are not completely accurate, and the laboratory reports the presence of a certain illness through a test which conveys a condensed result about the condition of the concerned case.

The people who show a positive response from the test fall into the following cases:

1) Case-1 : Those who are ill and get the correct answer from the test. This group is the true positives, which amounts to 99 percent of the 1 percent of the population who have the illness

2) Case-2 : Those who are not ill and get the wrong diagnosis from the test. This group consists of 1 percent of the 99 percent of the population who get a positive response even though they do not have the illness. Again, this is a multiplication of 99 percent and 1 percent; this group corresponds to the false positive cases in the given sample. In simple words, this category covers those patients who are actually not ill (they may be fit and fine) but who, due to some aberration or mistake in the report, are misdiagnosed as ill. Under such circumstances, wrong medicines might be administered, which, rather than curing anything, might aggravate the person's condition and render him more vulnerable to hazards, catastrophes and possibly untimely death

* So correctly estimating the cases when classifying a disease or illness helps in administering the proper medicine, which helps the patient recover. If the classification is wrong, the patient is placed in the wrong category and wrong medicines could be administered to the patient seeking medical assistance for his illness.
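The two cases above can be combined through the Bayes formula to find how likely it is that a person with a positive result is actually ill. The sketch below uses the numbers from the example (1 percent of the population is ill, the test is right for 99 percent of ill people and wrongly positive for 1 percent of healthy people); the function name `posterior` is my own choice.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(ill | positive) = P(positive | ill) * P(ill) / P(positive)."""
    # P(positive) is the sum of true positives and false positives
    true_positives = likelihood * prior
    false_positives = false_positive_rate * (1 - prior)
    evidence = true_positives + false_positives
    return true_positives / evidence


p_ill_given_positive = posterior(prior=0.01, likelihood=0.99, false_positive_rate=0.01)
print(p_ill_given_positive)
```

With these numbers the true positives (99 percent of 1 percent) and the false positives (1 percent of 99 percent) are equally large, so a positive result means the person is ill with a probability of only about one half.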

( I hope there is some clarity about the cases where Bayesian probability estimation can be put to use. As mentioned, this approach is widely used for the proper treatment and classification of illnesses and patients; classification of fraudulent cases of credit card / debit card utilisation; and evaluation of the productivity of employees at a given organisation by the management using certain metrics :P ...... I shall try to extend the use cases and applications of this theorem in later blogs and articles )

Tuesday, April 20, 2021

Some Operating cases on Probabilities


* One must rely on some set of rules for the operations to make sense to the user who is conducting the experiment on probabilities. For example, if someone is conducting an experiment of tossing a coin, then he/she must strictly define the rules according to which the game of tossing a coin is played. The instructor declares which outcomes count as valid and which do not and must be discarded the moment the norms of the game are violated.

* Another property of probabilities that one needs to be aware of is summation: probabilities can be summed only when the events concerned are mutually exclusive. For example, let's consider rolling a dice in a game of ludo, where the possible outcomes of a throw are 1, 2, 3, 4, 5, 6. The probability of each outcome is 1/6. Here the outcomes in the sample space are disjoint and mutually exclusive, so the probability of each is one divided by the total number of outcomes in the sample space. If one would like to know the probability that any one of the outcomes occurs, one adds up the probabilities of the individual outcomes, which yields 1. So, in retrospect, the individual outcomes of a probability experiment are disjoint and mutually exclusive, and their probabilities sum to 1.

* We can take another simple example to demonstrate probability calculation: the probability of picking a diamond or a club from a deck of cards. Total number of cards in the entire deck = 52. Number of cards in the house of spades = 13, number of cards in the house of clubs = 13, number of cards in the house of hearts = 13, number of cards in the house of diamonds = 13. The probability of picking a diamond is 13/52, and the probability of picking a club is likewise 13/52. So the total probability of picking a card from either of the two houses is 26/52, which equals 0.5

* One can use subtraction to determine the probability that an event does not occur. For instance, if I want to draw a card which is not a diamond from the overall deck of cards, the approach is as follows. First take the overall probability of drawing any card, which is 1, and then subtract the chance of drawing a diamond: 1 - 0.25, which is 0.75. This gives the complement of the event, which is the probability of its non-occurrence.
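The card calculations above can be verified with exact fractions from Python's standard library. This is just a small check of the arithmetic; the variable names are my own.

```python
from fractions import Fraction

deck_size = 52
cards_per_house = 13

# Summation of two mutually exclusive events: a diamond or a club
p_diamond = Fraction(cards_per_house, deck_size)
p_club = Fraction(cards_per_house, deck_size)
p_diamond_or_club = p_diamond + p_club

# Subtraction gives the complement: a card that is not a diamond
p_not_diamond = 1 - p_diamond

print(p_diamond_or_club, float(p_diamond_or_club))
print(p_not_diamond, float(p_not_diamond))
```

Using `Fraction` keeps the results exact, so 26/52 reduces to 1/2 and the complement comes out as 3/4 without any rounding.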

* Multiplication is helpful for finding the intersection of a set of independent events. Independent events are those which do not influence each other. For instance, if one throws two dice together, the probability of getting two sixes is 1/36. This is obtained by multiplying the probabilities for the two dice: the probability of a 6 on the first dice is 1/6, the probability of a 6 on the second, independent dice is also 1/6, and the product of the two is 1/36, or about 0.028.

* Using summation, difference and multiplication, one can obtain the probability of most combinations of events. For instance, the probability of getting at least one six from two throws of a dice is a summation of mutually exclusive events. Probability of obtaining two sixes, p = 1/6 * 1/6 = 1/36

* In a similar manner, the probability of having a six on the first dice and then something other than a six on the second throw is p = (1/6)*(1 - 1/6) = 5/36

* Probability of getting at least one six from two thrown dice is p = 1/6*1/6 + 2*1/6*(1 - 1/6) = 11/36
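One way to double-check the dice results is to list all 36 equally likely outcomes of two throws and count the ones that match each event. The helper `probability` below is a hypothetical name used only for this sketch.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of throwing two dice
outcomes = list(product(range(1, 7), repeat=2))


def probability(event):
    """Share of outcomes for which the event holds."""
    favourable = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(favourable, len(outcomes))


p_two_sixes = probability(lambda o: o == (6, 6))
p_six_then_other = probability(lambda o: o[0] == 6 and o[1] != 6)
p_at_least_one_six = probability(lambda o: 6 in o)

print(p_two_sixes, p_six_then_other, p_at_least_one_six)
```

The counts agree with the formulas: one outcome has two sixes, five have a six followed by something else, and eleven contain at least one six.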

Monday, April 19, 2021

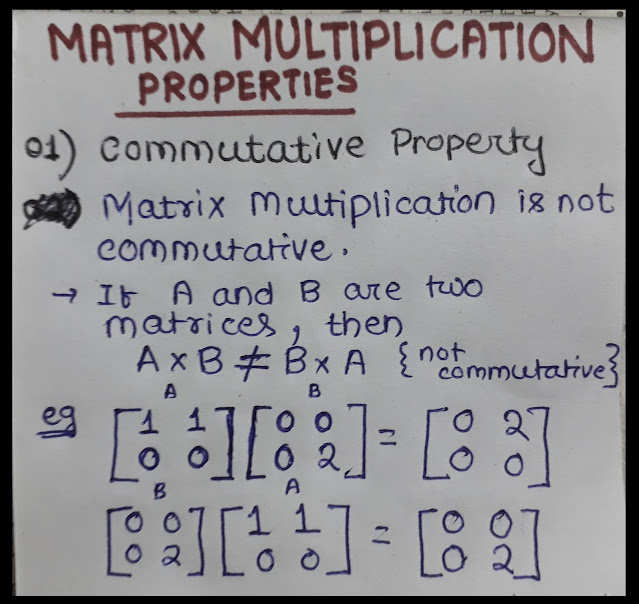

Advanced Matrix Operations – A theoretical view


========================================

* One may encounter some important matrix operations in algorithmic formulations

* The advanced matrix operations are the transpose and the inverse of a given matrix form of a dataset

* Transposition occurs when a matrix of shape n x m is transformed into a matrix of shape m x n by exchanging the rows with the columns

* Most texts indicate the operation using the superscript T, as in A^T (A transpose)

* One can apply "matrix inversion" to matrices of shape m x m, which are square matrices that have the same number of rows and columns. In mathematical language, such a matrix is said to have m rows and m columns.

* The above operation is important for the immediate resolution of equations which involve matrix multiplication, such as y = bX, where one has to discover the values in the vector b. More on matrix multiplication with conceptual examples will be showcased in another article in which I shall try to cover how the multiplication of different matrices occurs and how it is used to solve more important / complex problems.

* Since most scalar numbers (the exception being zero) have a number whose multiplication with them results in a value of 1, the idea is to find a matrix inverse whose multiplication results in a special matrix called the identity matrix, whose elements are zero except the diagonal elements, which are 1 (the elements in positions where the index i is equal to the index j)

* The scalar analogue is straightforward: the scalar number n has an inverse that is n to the power minus 1, which can be represented by 1/n, that is, 1 upon n

* The inverse of a matrix A is indicated as A to the power minus 1. Sometimes, finding the inverse of a matrix is impossible

* When a matrix cannot be inverted, it is referred to as a "singular matrix" or a "degenerate matrix". Singular matrices are the exception rather than the norm and are quite rare to occur.
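The operations described above can be sketched in plain Python for small matrices. This is only an illustration under my own assumptions: the helper names are hypothetical, the inverse is written for the 2 x 2 case only, and the example matrices are made up. Real projects would normally rely on a numerical library instead.

```python
def transpose(matrix):
    """Exchange rows with columns: an n x m matrix becomes m x n."""
    return [list(row) for row in zip(*matrix)]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse_2x2(matrix):
    """Invert a 2 x 2 matrix, or fail if it is singular."""
    (a, b), (c, d) = matrix
    determinant = a * d - b * c
    if determinant == 0:
        raise ValueError("matrix is singular and cannot be inverted")
    return [[d / determinant, -b / determinant],
            [-c / determinant, a / determinant]]


transposed = transpose([[1, 2, 3], [4, 5, 6]])

A = [[4.0, 7.0], [2.0, 6.0]]
A_inverse = inverse_2x2(A)

# Multiplying a matrix by its inverse gives (approximately) the identity matrix
identity = matmul(A, A_inverse)

print(transposed)
print(A_inverse)
print(identity)
```

Passing a singular matrix such as [[1, 2], [2, 4]], whose second row is a multiple of the first, makes `inverse_2x2` raise an error, which mirrors the case where no inverse exists.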

Monday, April 12, 2021

Math behind Machine Learning - An introductory article on the usage of mathematics and statistics as the foundation of Machine Learning

Math behind Machine Learning

* If one wants to implement existing machine learning algorithms from scratch, or if someone wants to devise newer machine learning algorithms, then one would require a profound knowledge of probability, linear algebra, linear programming and multivariable calculus

* Along with that, one may also need to translate math into a form of working code, which means that one needs to have a good deal of sophisticated computing skills

* This article is an introduction which would help someone in understanding the mechanics of machine learning and thereafter describe how to translate math basics into usable code

* If one would like to apply existing machine learning knowledge to practical purposes and practical projects, then one can leverage the best possibilities of machine learning over datasets using the software libraries of the R and Python languages, with some basic knowledge of math, statistics and programming, as Machine Learning's core foundation is built upon skills in all of these areas

* Some of the things that can be accomplished with a clearer understanding and grasp of these areas are the following:

1) Performance of Machine Learning experiments using the R and Python languages

2) Knowledge of Vectors, Variables and Matrices

3) Usage of Descriptive Statistics techniques

4) Knowledge of statistical measures like Mean, Median, Mode, Standard Deviation and other important parameters for judging or evaluating a model

5) Understanding the capabilities of Machine Learning and the ways in which it could be put to work, which would help in making better predictions etc

Thursday, April 8, 2021

MapReduce Programming - An introductory article into the concept of MapReduce Programming

MapReduce Programming

Tuesday, March 30, 2021

Working with Data Objects in R


* Data Objects are the fundamental items that one can work with in the R language

* Carrying out analysis on one's data and making sense of the results are the most important reasons for using R. This article covers methods of working with a given data set, and understanding the results associated with R's data objects is the central idea of working in R

* One can learn to use the different forms of data that are associated with R and how to convert the data from one form to another

* In R, one will also learn the techniques of sorting and rearranging the data

* Some of the processes involved in manipulating Data Objects in R are as follows:

==========================

Manipulating Data Objects in R

==========================

- When you collect the data, the very first step is to get the data over to the R console
