This technical blog is my own collection of notes, articles, implementations and interpretations of topics in coding, programming, data analytics, data science, data warehousing, cloud applications and artificial intelligence. Feel free to explore my blog and articles for reference and downloads. Do subscribe, like, share and comment ---- Vivek Dash

Friday, May 7, 2021

Notes on UnSupervised Machine Learning Algorithm - Revision Short Notes on how it is done , its goals , application areas , categories

Thursday, May 6, 2021

Monday, April 26, 2021

Descending the Right Curve in Machine Learning - A relation to science fiction and science in practice

* Machine Learning may appear as a magic trick to any newcomer to the discipline, which is something to expect from any application of advanced science. Arthur C. Clarke, the futurist and author of popular science fiction stories like 2001: A Space Odyssey, made a similar observation about technology in general. The point is that ML combines so many techniques, and so much machinery and engineering, that it can look incomprehensible to a general user, even though what it does is build models and make predictions from the patterns identified in a particular dataset

* Clarke's third law states that "any sufficiently advanced technology is indistinguishable from magic". Clarke was not writing about Machine Learning specifically, but the law fits it well: to a common user, any sufficiently high-level technology seems like some form of magic. In magic, the trick is to carry off a spectacle without letting the viewer learn the working principle behind it

* Though it is widely believed that Machine Learning's underlying strength is some form of imperceptible mathematical, statistical and coding-based magic, it is not magic at all. One needs to understand the foundational concepts from scratch so that some of the more complex working mechanisms can be understood. Therefore, it is said that machine learning is the application of mathematical formulations to the task of learning

* Assuming that the world itself can be represented by mathematical and statistical formulations, machine learning algorithms strive to learn such formulations by tracing them back from a limited number of observations.

* Just as humans have the power of distinction and perception, and can recognise which object is a ball and which is a tree, machine learning algorithms can use the computational power of computers, together with the widely available data on all subjects and domains, to learn how to solve a large number of important and useful problems

* Though Machine Learning is a complex subject, humans devised it, and at its inception Machine Learning started by mimicking the way in which one learns from the surrounding world. On top of that, one can express simple data problems and basic learning algorithms in terms of how a child would perceive and understand the world, or describe the solving of a challenging learning problem with the analogy of descending from the top of a mountain by taking the right slope of descent.

* Now, with a somewhat better understanding of the capabilities of machine learning and how they can help in solving a problem, one can start to learn the more complex facets of the technology in greater detail, with more examples of their proper usage.

Wednesday, April 21, 2021

An article on - Conditioning Chance and Probability by Bayes Theorem

Conditioning Chance & Probability by Bayes Theorem

* Probability is one of the key concepts in statistics, and closely tied to it is Conditional Probability, which measures the chance of occurrence of one particular event given the occurrence of some other event that may affect it.

* When one would like to estimate the probability of any given event, one may start from a belief about that probability, calculated over a set of possible events or situations. This belief is called the "a priori probability", which means the general probability of any given event.

* For example, in the throw of a coin, if the coin is fair, then the a priori probability of a head is 50 percent. This means that before tossing the coin, one already knows the probability of a positive (in other words, desired) outcome and of a negative (in other words, undesired) outcome.

* Therefore, no matter how many times one has tossed the coin, on every new toss the probability of heads is still 50 percent and the probability of tails is still 50 percent.

* But consider a situation where the context changes. The a priori probability is then not valid anymore, because something has happened that changes the outcome: the prerequisites and conditions under which the general experiment was assumed to be carried out no longer hold. In such a case, one can express the belief as an a posteriori probability, which is the a priori probability revised after something has happened that tends to modify the count or outcome of the event.

* For instance, the estimate that a person is male or female is about 50 percent each in almost all cases. But the general assumption that every population has the same demography is wrong. According to the article I referred to, women generally tend to live longer than men, and as a result the demographic of the older age brackets tilts towards the female gender.

Hence, taking these factors into account, one should not apply a single overall figure for gender to every part of a population, because this factor is tilted across age brackets and an overall generalisation of it would be misleading.

* Again, taking gender as the example, the a posteriori probability (for instance, the probability of being female given that the person belongs to an older age bracket) is different from the expected a priori one, which estimates somebody's gender on the belief that there are 50 percent males and 50 percent females in a given population.

* One can write a conditional probability as P(y|x), which is read as the probability of the event y given that the event x has occurred. Because Conditional Probability plays such an important role in machine learning, understanding this notation is of paramount importance to any newbie or virtuoso in the field of maths, statistics and machine learning.

* As mentioned in the paragraph above, because it depends on one or more prior conditions, conditional probability is of paramount importance for machine learning, which takes into account the statistical conditions under which an event occurs. If the a priori probability can change because of circumstances, knowing the possible circumstances can give a big push to one's chances of correctly predicting an event by observing the underlying examples - exactly what machine learning generally intends to do.

* Generally, the probability that a random person is male or female is around 50 percent. But once the age structure and mortality of a population are taken into consideration, we have seen that the demographic tilts in favour of females. If, under such conditions, one gives a machine learning algorithm evidence about the considered person, such as length of hair or age bracket, the algorithm can determine the gender of the person considerably better than the a priori estimate
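The difference between an a priori and a conditional probability can be sketched by simple counting. The sample below is a made-up toy dataset (the counts are assumptions chosen for illustration, not real demographic data), and the helper names `probability` and `conditional` are hypothetical.

```python
from fractions import Fraction

# Hypothetical toy sample of (gender, hair) observations; the counts are
# invented for illustration and are not real demographic data.
people = (
    [("female", "long")] * 30
    + [("female", "short")] * 20
    + [("male", "long")] * 5
    + [("male", "short")] * 45
)

def probability(records, event):
    """Fraction of records for which event(record) is true."""
    hits = sum(1 for r in records if event(r))
    return Fraction(hits, len(records))

def conditional(records, event, given):
    """P(event | given): restrict the sample to 'given', then count."""
    subset = [r for r in records if given(r)]
    return probability(subset, event)

def is_female(record):
    return record[0] == "female"

def has_long_hair(record):
    return record[1] == "long"

prior = probability(people, is_female)                     # P(female)
posterior = conditional(people, is_female, has_long_hair)  # P(female | long hair)

print(prior)      # 1/2
print(posterior)  # 6/7
```

In this toy sample, knowing that the person has long hair moves the estimate from 1/2 to 6/7, which is the kind of push from circumstances described above.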

An article on - Bayes Theorem application and usage

Bayes Theorem application and usage

Instance and example of usage of Bayes Theorem in Maths and Statistics :

P(B|E) = P(E|B)*P(B) / P(E)

If one reads the formula, one comes across the following terms, which can be elaborated with the help of an instance in the following manner :

* P(B|E) - The probability of a belief (B) given a set of evidence (E) is called the Posterior Probability. In the given case, the hypothesis presented to the reader is that a person is a female, and the evidence is that the person's hair is sufficiently long. The posterior is therefore the probability that the subject is a female given long hair

* P(E|B) - This is the conditional probability of the evidence given the belief, that is, the probability of having sufficiently long hair given that the subject is a female. Note that the condition runs in the opposite direction to the posterior.

* P(B) - Here, B stands for the general probability of being a female, which is the a priori probability of the belief. In the given case, the probability is around 50 percent, that is, a value of around 0.5

* P(E) - This is the general probability of having long hair. In the equation this term is also treated as an a priori probability, which means its value is available well in advance, and the value is pivotal for the formulation of the posterior probability

To solve the previous problem using the Bayes formula, one puts all the constituent values into the given equation and evaluates it.
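As a minimal sketch of filling in the formula: only P(B) = 0.5 comes from the text above; the values used for P(E|B) and P(E) are assumptions picked for illustration, and `bayes_posterior` is a hypothetical helper name.

```python
from fractions import Fraction

def bayes_posterior(likelihood, prior, evidence):
    """Return P(B|E) = P(E|B) * P(B) / P(E)."""
    return likelihood * prior / evidence

p_b = Fraction(1, 2)          # P(B): prior of being a female (from the text)
p_e_given_b = Fraction(3, 5)  # P(E|B): ASSUMED share of females with long hair
p_e = Fraction(7, 20)         # P(E): ASSUMED overall share of people with long hair

p_b_given_e = bayes_posterior(p_e_given_b, p_b, p_e)
print(p_b_given_e)  # 6/7, about 0.857
```

With these assumed inputs, the evidence of long hair raises the belief that the person is a female from 0.5 to about 0.857.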

The same type of reasoning is also required for estimating the presence of a certain disease within a given population. For this, one needs to undergo a certain type of test, which produces either a negative or a positive result.

Generally, it is perceived that most medical tests are not completely accurate, and the laboratory reports the presence of a certain illness as a condensed result about the condition of the concerned case.

When one would like to see the number of people showing a positive response from a test, the cases are as follows :

1) Case-1 : Who is ill and who gets the correct answer from the test. This group gives the true positives, which amount to 99 percent of the 1 percent of the population who have the illness

2) Case-2 : Who is not ill and who gets the wrong diagnosis result from the test. This group consists of 1 percent of the 99 percent of the population who are not ill: they get a positive response even though they do not have the illness. Again, this is a multiplication, of 99 percent and 1 percent; this group corresponds to the false positive cases in the given sample. In simple words, this category takes into its ambit those patients who are actually not ill (may be fit and fine) but who, due to some aberration or mistake in the report, are misdiagnosed as ill. Under such circumstances, the wrong medicines might be administered, which rather than curing the person might aggravate their condition, rendering them more vulnerable to hazards, catastrophes and probably untimely death

* So working through these cases of correct Classification for a certain disease or illness helps in administering the proper medicine, which helps the patient recover; if the case is wrongly classified, the wrong medicines could get administered to the patient seeking medical assistance for their illness.
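The two cases above can be combined with the Bayes formula to ask how likely a person is to be ill given a positive test. The sketch below uses only the figures stated in the cases (1 percent ill, 99 percent of the ill detected, 1 percent of the healthy wrongly flagged); the variable names are my own.

```python
from fractions import Fraction

prevalence = Fraction(1, 100)           # 1 percent of the population is ill
sensitivity = Fraction(99, 100)         # share of ill people who test positive
false_positive_rate = Fraction(1, 100)  # share of healthy people who test positive

true_positives = sensitivity * prevalence                 # Case-1
false_positives = false_positive_rate * (1 - prevalence)  # Case-2
p_positive = true_positives + false_positives             # P(E): any positive result

# Bayes: P(ill | positive) = P(positive | ill) * P(ill) / P(positive)
p_ill_given_positive = true_positives / p_positive
print(p_ill_given_positive)  # 1/2
```

Both groups are the same size (99 in 10,000), so under these figures a positive result means only a 50 percent chance of actually being ill, which is why a wrong classification is a real risk.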

( I hope there is some clarity about the cases where Bayesian Probability estimations can be put to use. As mentioned, this theorem is widely used for the proper treatment and classification of illnesses and patients; for the classification of fraudulent cases of credit card / debit card utilisation; and for evaluating the productivity of employees at a given organisation by the management using certain metrics :P ...... I shall try to extend the use cases and applications of this theorem in later blogs and articles )

Tuesday, April 20, 2021

Exploring the World of Probability Theory in ML .. derived article with own interpretations

Exploring the World of Probability Theory in ML

* What is Probability and how can it be used? Probability is the likelihood of an event, which means that Probability can help someone to determine how likely something is to happen using mathematics (Gannita Gyaana), by expressing the likelihood of occurrence of an event in terms of the total number of possible events that could occur.

* The probability of an event is measured in the range from 0 (no probability that an event occurs) to 1 (a certainty that an event occurs); intermediate values say how close the event is to either of the two extremes.

* The probability of picking a certain card or suit from a deck of Cards (generally referred to as "Taash" in many Asian countries) is one of the most classic examples for explaining probabilities.

* The deck contains 52 cards (joker cards excluded), which are divided into four suits: clubs and spades, which are black, and diamonds and hearts, which are red in colour.

* Therefore, if one wants to determine the probability of picking an ace, one must consider that there are four aces, one of each suit. The probability of such an event is p = 4/52, which evaluates to about 0.077.

* Probabilities are between the values of 0 and 1; no probability can exceed these boundaries, as the possibility of occurrence of anything lies between impossible and certain: the probability of an impossible event is always 0 and the probability of a certain event is always equal to 1.

* Suppose someone tries to make a probability prediction for a case of fraud detection, in which one would like to find out how many times a bank-transaction-related fraud has occurred over a given set of bank accounts, or how many times people get a certain disease in a particular country. After collecting all the events, one can estimate the probability of such a forthcoming event with regard to the frequency of occurrence, mode of occurrence and time of occurrence, as well as the accounts likely to be affected by the fraud and the conditions likely to affect those accounts.

The estimate is calculated by counting the number of times a particular event occurred and dividing it by the total number of events that could possibly occur.

* One can count the number of times the fraud happens using recorded data (mostly taken from databases) and then divide that figure by the total number of generic events or observations available

* Therefore, one should divide the total number of frauds by the number of transactions within a year, or divide the total number of people who fell ill during the year by the population of a certain area. The result is a number ranging from 0 to 1, which one can use as the baseline probability for a certain event under a certain type of circumstances

* Counting all the occurrences of an event is not always possible, which is why one needs to know about the concept of sampling. Sampling means observing a small part of a larger set of events or objects, chosen on the basis of certain probability expectations, and yet being able to infer approximately correct probabilities for an event, as well as measures such as quantitative measurements or qualitative classes related to a set of objects

* Example - If one wants to track the sales of cars in a certain country, one doesn't need to track all the sales that occur in that geography; rather, using a sample comprising the sales from some new car sellers around the country, one can determine quantitative measures such as the average price of a car sold, or qualitative measures such as the car model sold most often
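The counting and sampling ideas above can be sketched as follows. The population here is entirely hypothetical (100,000 transactions with 2,000 frauds are assumed figures), and the seed is fixed only so that the run is repeatable.

```python
import random

random.seed(0)  # fixed seed so that the sketch is repeatable

# Hypothetical population: 100,000 transactions, 2,000 of them fraudulent.
transactions = [True] * 2_000 + [False] * 98_000  # True marks a fraud

# Baseline probability: count the events and divide by all observations.
p_fraud = sum(transactions) / len(transactions)

# Sampling: look at only 5,000 randomly chosen transactions.
sample = random.sample(transactions, 5_000)
p_fraud_estimate = sum(sample) / len(sample)

print(p_fraud)           # 0.02
print(p_fraud_estimate)  # close to 0.02; the exact value depends on the sample
```

The sample covers only 5 percent of the records, yet its estimate should land near the full-count figure; a larger sample would generally land closer.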

Some Operating cases on Probabilities


* One must rely on some set of rules in order for the operations to make sense to the user who is conducting the experiment on probabilities. For example, if someone is conducting an experiment of tossing a coin, he/she must strictly define the rules according to which the game of tossing a coin is played out. The instructor declares which outcomes count as valid and which do not; invalid outcomes are discarded the moment the norms of the game are violated.

* Another property of probabilities that one needs to be aware of is summation: probabilities can be added only when the events concerned are mutually exclusive. For example, let's consider rolling a dice in a game of ludo; the possible events that could turn up are 1, 2, 3, 4, 5, 6, and the probability of each is 1/6. The events in this sample space are disjoint and mutually exclusive, and each is equally likely, so the probability of each event is 1 divided by the total number of events in the sample space. If one would like to know the probability that any one of the events occurs, one adds up the probabilities of the individual events, which yields 1. So the individual outcomes of a probability experiment are disjoint and mutually exclusive, and their probabilities sum to 1.

* We can take another simple example to demonstrate probability calculation: picking a spade or a diamond from a deck of cards. Total number of cards in the entire deck = 52. Number of cards in the house of spades = 13, number of cards in the house of clubs = 13, number of cards in the house of hearts = 13, number of cards in the house of diamonds = 13. The probability of picking a card from the house of diamonds is 13/52; likewise, the probability of picking a card from the house of spades is 13/52. Since a card cannot belong to both houses, the total probability of picking a card from either of the two houses is 26/52, which equals 0.5

* One can use subtraction to determine the probability of the complement of an event. For instance, say I want to draw a card which is not a diamond from the overall deck of cards. One starts from the total probability of drawing any card, which is 1, and subtracts the probability of drawing a diamond: 1 - 0.25, which is 0.75. The complement obtained in this manner is the probability of an event not occurring.

* Multiplication is helpful for finding the probability of the intersection of a set of independent events. Independent events are those which do not influence each other. For instance, if one throws two dice together, the probability of getting two sixes is 1/36. The probability of a 6 on the first dice is 1/6, the probability of a 6 on the second, independent dice is also 1/6, and the product of the two probabilities is 1/36, or about 0.028.

* Using the concepts of summation, difference and multiplication, one can obtain the probability of most of the calculations which deal with events. For instance, the probability of getting at least one six from two throws of a dice is a summation of mutually exclusive events. Probability of obtaining two sixes, p = 1/6 * 1/6 = 1/36

* In a similar manner, the probability of having a six on the first dice and then something other than a six on the second throw is p = (1/6)*(1 - 1/6) = 5/36

* Probability of getting at least one six from two thrown dice is p = 1/6*1/6 + 2*1/6*(1 - 1/6) = 11/36
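The three results above can be checked by listing all 36 outcomes of two dice and counting. This is only a brute-force sketch; the helper name `prob` is my own.

```python
from fractions import Fraction
from itertools import product

faces = range(1, 7)
outcomes = list(product(faces, repeat=2))  # all 36 equally likely (first, second) pairs

def prob(event):
    """Probability of an event, counted over the 36 outcomes of two dice."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

two_sixes = prob(lambda o: o == (6, 6))
six_then_other = prob(lambda o: o[0] == 6 and o[1] != 6)
at_least_one_six = prob(lambda o: 6 in o)

sixth = Fraction(1, 6)
# Counting agrees with the multiplication, difference and summation rules.
assert two_sixes == sixth * sixth
assert six_then_other == sixth * (1 - sixth)
assert at_least_one_six == sixth * sixth + 2 * sixth * (1 - sixth)
# The complement rule gives the same answer: 1 - P(no six on either dice).
assert at_least_one_six == 1 - (1 - sixth) ** 2

print(two_sixes, six_then_other, at_least_one_six)  # 1/36 5/36 11/36
```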

Monday, April 19, 2021

Using Vectorisation Effectively in Python and R – a revision example

========================================================

Using Vectorisation Effectively in Python and R – instance example

========================================================


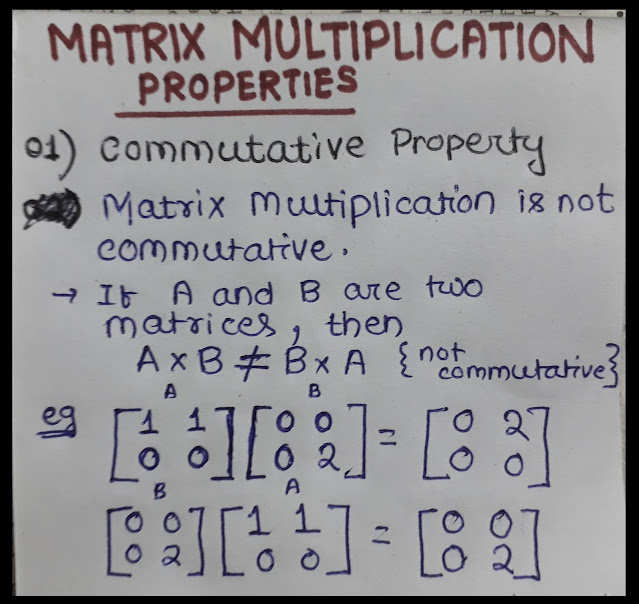

While performing Matrix Operations, such as Vector Multiplication, it is easy to forget that the computer does all the hard work. What does one need to do while working on numbers in a Vectorised form?

=========================================

Creating a simple Vector as a NumPy array

=========================================

import numpy as np

# Create a one-dimensional array (a vector)
y = np.array([43, 45, 47, 49, 51])
print(y)
print(y.shape)

Output
[43 45 47 49 51]
(5,)

==================================

==================================

* The attribute "shape" promptly tells one the shape of an array. In the example above, the shape is (5,): the object has a single dimension holding five elements and no second dimension, which means that the object is a vector
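As a small sketch of how "shape" distinguishes a vector from a matrix (the `matrix` values are made up for the example):

```python
import numpy as np

vector = np.array([43, 45, 47, 49, 51])
matrix = np.array([[1, 2, 3], [4, 5, 6]])  # hypothetical 2 x 3 matrix

print(vector.shape, vector.ndim)  # (5,) 1
print(matrix.shape, matrix.ndim)  # (2, 3) 2

# reshape turns the vector into an explicit column: 5 rows, 1 column
column = vector.reshape(-1, 1)
print(column.shape)               # (5, 1)
```

The trailing comma in (5,) is what marks the object as one-dimensional; a column matrix with the same five values reports (5, 1) instead.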

==================================

==================================

import numpy as np

# A matrix is built from a list of rows (note the outer pair of brackets)
X = np.array([[1.1, 1, 545, 1], [4.6, 0, 345, 2], [7.2, 1, 754, 3]])
print(X)

==================================

==================================

* One can sum, subtract, multiply or divide arrays using the several standard operators of the Python language in the given manner. Let's take two array objects, a and b

a = np.array([[1,1],[1,0]])
b = np.array([[1,0],[0,1]])
print(a - b)
[[ 0  1]
 [ 1 -1]]

==================================

a = np.array([[0,1],[1,-1]])
print(a * -2)
[[ 0 -2]
 [-2  2]]

==================================

X = np.array([[4,5],[2,4],[3,3]])
b = np.array([3,-2])
print(np.dot(X,b))
[ 2 -2  3]

# A matrix needs an outer pair of brackets around its rows
B = np.array([[3,-2],[-2,5]])
print(np.dot(X,B))
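The shapes involved in these products can be sketched as below. The `@` operator is used here as an alternative to np.dot, which for these one- and two-dimensional arrays gives the same result.

```python
import numpy as np

X = np.array([[4, 5], [2, 4], [3, 3]])  # shape (3, 2)
b = np.array([3, -2])                   # shape (2,)
B = np.array([[3, -2], [-2, 5]])        # shape (2, 2)

Xb = X @ b  # matrix times vector: one value per row of X
XB = X @ B  # matrix times matrix: (3, 2) @ (2, 2) gives (3, 2)

print(Xb.shape, XB.shape)  # (3,) (3, 2)
print(Xb)                  # [ 2 -2  3]
print(XB)
```

Each entry of the result is a row of X multiplied element by element with b (or with a column of B) and summed; for example the first entry is 4*3 + 5*(-2) = 2.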

* In R, one can find the size of a vector using the "length()" function. For matrices one should use the dim() function instead, because applying the length() function to a matrix returns only the total number of elements in it.

Tuesday, March 30, 2021

Working with Data Objects in R


* Data Objects are the fundamental items that one can work with in the R language

* Carrying out analysis on one's data and making sense of the results are the most important reasons for using R. This article covers methods of working with a given data set; understanding the results associated with the data objects is central to working in R

* One can learn to use the different forms of data that are associated with R and how to convert the data from one form to another

* In R, one would also learn the techniques of sorting and rearranging the data

* Some of the processes involved in manipulating Data Objects in R are as follows :

==========================

Manipulating Data Objects in R

==========================

- When you collect the data, the very first step is to get the data over to the R console

-

Write a program in Python to calculate the factorial value of a number When we talk about factorial of a number , then the factorial of tha...