This technical blog is my own collection of notes, articles, implementations and interpretations of topics in coding, programming, data analytics, data science, data warehousing, cloud applications and artificial intelligence. Feel free to explore my blog and articles for reference and downloads. Do subscribe, like, share and comment. ---- Vivek Dash

Thursday, August 5, 2021

One-Hot Encoding and Dummy Variable Generation on a DataFrame | Scenario: Perform One-Hot Encoding on Unordered Data in a Sample DataFrame and Generate One-Hot Encoded Feature Variables | Conceptual Infographic Note

Sunday, July 18, 2021

An Introduction to Classification Algorithms with 10 fundamental questions | An Infographic Note with questions and answers

Wednesday, July 14, 2021

Infographic Note on Z-Score in Statistics and Machine Learning with its usage scenario, calculation, implication and applications

Sunday, June 27, 2021

Sunday, June 6, 2021

Infographic Short Note on P-value in Statistics and its applications in Machine Learning

Saturday, June 5, 2021

Friday, May 28, 2021

Infographic Revision Short Note on Two-Way ANOVA in Statistics, Analytics and Machine Learning

Infographic Revision Short Note on Data Collection using Interviews in Statistics, Analytics and Machine Learning

Infographic Revision Short Note on Statistical Data Collection using Interviews in Statistics, Analytics and Machine Learning - part 2

Thursday, May 6, 2021

Tuesday, May 4, 2021

Monday, May 3, 2021

Friday, April 30, 2021

Validation of Machine Learning Algorithms and Scenarios - A short article

Validation of Machine Learning Codes

* For example, if one wants a computer to distinguish a photo of a dog from a photo of a cat, one can start with good examples of dogs and cats. One can then train a dog-versus-cat classifier, based on some machine learning algorithm, that outputs the probability that a given photo shows a dog or a cat. For a batch of photos, the output takes the form of a validation quantity: a number that reflects how well the classifier performed and with what level of alacrity and accuracy. I use the word alacrity to convey the performance and speed of the identification process when the algorithm runs over a batch of photos, finding resemblances between classes of photos with techniques such as segmentation, clustering or KNN. Accuracy, in turn, can be expressed as a percentage: the share of photos that the classifier assigns to the correct class.

* Based on this estimated probability, one can then decide the class of the photo (that is, whether it shows a dog or a cat).

* Whenever the obtained probability is higher for a dog, one can minimize the risk of a wrong assessment by choosing the more likely class, that is, dog.

* The greater the difference between the probability of a dog and that of a cat, the more confidence one can have in the choice.

* If, instead, the difference between the probability of a dog and that of a cat is small, it can be assumed that the picture is not clear or that the subjects in the pictures share many features. In other words, some of the cat pictures resemble dog pictures, which causes confusion and may even raise the question of whether the dogs in those pictures look cat-like.

* On the point of training a classifier:

When you pose a problem and offer examples, each carefully marked with the label or class that the algorithm should learn, the computer trains the algorithm for a while and finally produces a resulting model from the training process over the dataset.

* The resulting model then provides an answer, or a probability, for each new example.

* Labelling is another associated activity, but in the end a probability is just an opportunity to propose a solution and get an answer.

* At such a point, one may have addressed all the issues and might guess that the work is finished, but one should still validate the results. The first check is that the results are comprehensible to humans: the user should clearly understand the background processes involved and have a break-up analysis of the code and the result, so that other readers can understand the code along with the numbers.

* Moreover, this will be elaborated in forthcoming sessions/articles, where we will look into the various ways in which machine learning results can be validated and made comprehensible to users.
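The decision rule described in the points above (pick the class with the higher probability, and trust the choice more when the gap between the two probabilities is large) can be sketched in a few lines of Python. This is a minimal sketch assuming a two-class problem where the classifier returns P(dog) and P(cat) = 1 - P(dog); the probabilities, true labels, helper names (`decide`, `accuracy`) and the 0.2 margin threshold are all hypothetical. Accuracy here is simply the share of photos labelled correctly.

```python
# Hypothetical classifier outputs: P(dog) for each photo in a small batch.
# The numbers and true labels below are made up for illustration only.


def decide(p_dog: float, margin_threshold: float = 0.2) -> tuple[str, float, bool]:
    """Pick the class with the higher probability and report the margin.

    Returns (label, margin, is_confident), where margin = |P(dog) - P(cat)|.
    """
    p_cat = 1.0 - p_dog  # two-class problem: the probabilities sum to 1
    label = "dog" if p_dog >= p_cat else "cat"
    margin = abs(p_dog - p_cat)
    return label, margin, margin >= margin_threshold


def accuracy(predicted: list[str], actual: list[str]) -> float:
    """Fraction of photos whose predicted label matches the true label."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


p_dog_batch = [0.92, 0.15, 0.55, 0.48, 0.81]
true_labels = ["dog", "cat", "cat", "cat", "dog"]

decisions = [decide(p) for p in p_dog_batch]
for p, (label, margin, confident) in zip(p_dog_batch, decisions):
    print(f"P(dog)={p:.2f} -> {label:3s} margin={margin:.2f} confident={confident}")

predicted_labels = [label for label, _, _ in decisions]
print(f"accuracy = {accuracy(predicted_labels, true_labels):.0%}")  # 4 of 5 correct -> 80%
```

In this made-up batch the third photo is labelled a dog although it is actually a cat, and its small margin flags it as exactly the kind of unclear case described above.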

Last modified: 16:39

Wednesday, April 28, 2021

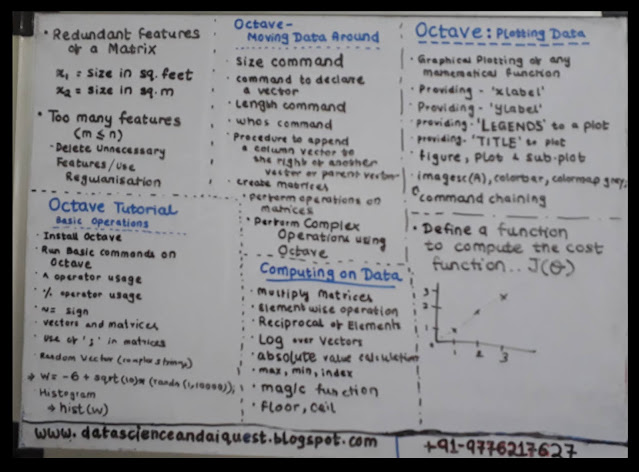

Exploring Cost Functions in ML

* The driving force behind optimization in machine learning is the response from a function internal to the algorithm, called the cost function.

* In addition, a cost function determines how well a machine learning algorithm performs in a supervised prediction or an unsupervised optimisation problem.

* The evaluation (cost) function works by comparing the algorithm's predictions against the actual outcomes recorded from the real world.

* Comparing a prediction against a real value using a cost function determines the algorithm's error level.

* Because it is a mathematical formulation, a cost function expresses the error level in numerical form. The algorithm then adjusts its parameters so that this number, and therefore the error of the overall output, is kept low.

* The cost function conveys what is actually important and meaningful for the purposes of the learning algorithm.

* For instance, when forecasting the stock market, the cost function expresses the importance of avoiding incorrect predictions; in such a case, one wants to make money by avoiding big losses. In forecasting sales, the concern is different: one needs to reduce the error in common and frequent situations rather than in rare and exceptional cases, so one uses a different cost function.


* When the problem is to predict who is likely to become ill from a certain disease, algorithms can assign a higher probability to people who share the characteristics of those who actually did become ill later. Depending on the severity of the illness, one may also prefer that the algorithm wrongly flags some people who do not get ill rather than miss people who actually do get ill.

* Having looked at how cost functions are used and how they are coupled with ML algorithms to fine-tune the result, we will now look at how and why a cost function is optimised.

* Optimisation of Cost Functions:

It is widely accepted that the cost function associated with a machine learning algorithm is what truly drives the success of a machine learning application. The cost function is an important part of the process, alongside representation (the capability of an algorithm to approximate certain mathematical functions) and optimisation (how the machine learning algorithm sets its internal parameters).

* Most machine learning algorithms come with their own optimisation procedure tied to their own cost function. This means that many of the better-developed algorithms can tune their internal parameters on their own, improving the result step by step during training. As a result, the user sometimes has little direct role in fine-tuning or presiding over the learning process.

* In addition, some algorithms let you choose among a certain number of possible cost functions, which gives more flexibility in choosing their course of learning.

* When an algorithm uses a cost function directly in the optimisation process, the cost function is used internally. Because algorithms are set to work with certain cost functions, the objective of the optimisation may differ from your desired objective.

* In such circumstances, the cost function is called an error function or a loss function when its value needs to be minimised, and a scoring function when the objective is to maximise it.

* With respect to one's target, standard practice is to define the cost function that works best for solving the problem, and then to figure out which algorithm works best at optimising it, which in turn defines the hypothesis space one would like to test. When working with algorithms that do not allow the cost function one wants, one can still indirectly influence their optimisation process by fixing their hyper-parameters and selecting the input features with respect to the cost function. Finally, once all the algorithm results have been gathered, one can evaluate them using the chosen cost function and decide on the final hypothesis with the best result on that chosen error function.

* Whenever an algorithm learns from a dataset (a collection of records arranged by attributes), its cost function guides the optimisation process by pointing out the changes in the internal parameters that are most beneficial for making better predictions. The optimisation continues as long as the cost function's response keeps improving from one iteration to the next. When the response stalls or worsens, it is time to stop tweaking the algorithm's parameters, because the algorithm is not likely to achieve better predictions from there on. And when the algorithm works on new data and makes predictions, the cost function helps to evaluate whether it is working correctly.
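The iterative loop described in the points above can be sketched in plain Python. This is a minimal sketch, not any particular library's optimiser: it assumes a straight-line model `y = w*x + b`, a mean squared error (MSE) cost, plain gradient descent and made-up data, and the learning rate `lr`, tolerance `tol` and helper names (`mse`, `fit_line`) are illustrative choices. The loop stops as soon as the cost no longer improves by more than `tol`.

```python
# Made-up data that lies exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def mse(w, b, xs, ys):
    """Mean squared error (the cost) of the line y = w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, lr=0.05, tol=1e-9, max_iter=10_000):
    """Gradient descent on the MSE; stops when the cost stops improving."""
    n = len(xs)
    w, b = 0.0, 0.0
    cost = mse(w, b, xs, ys)
    iterations = 0
    for iterations in range(1, max_iter + 1):
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        new_w, new_b = w - lr * grad_w, b - lr * grad_b
        new_cost = mse(new_w, new_b, xs, ys)
        # The cost response has stalled or worsened: stop tweaking parameters.
        if cost - new_cost < tol:
            break
        w, b, cost = new_w, new_b, new_cost
    return w, b, cost, iterations


w, b, cost, iterations = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, cost={cost:.2e}, iterations={iterations}")
```

Here the cost function plays both roles described above: it supplies the gradient that says which way to move `w` and `b`, and its stalling response is the signal to stop.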

In conclusion, even if choosing a particular cost function is an underrated activity in machine learning, it is still a fundamental task, because it determines how the algorithm behaves after learning and how it handles the problem one wants to solve. Rather than going with the default cost function, one should ask what the fundamental objective is and choose a cost function that yields the appropriate result.
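To see why the default choice matters, here is a minimal sketch built on the disease-prediction example earlier in the article. It assumes hypothetical risk scores and outcomes, a hypothetical set of candidate thresholds, and a hypothetical cost in which missing a person who becomes ill costs 5 units while wrongly flagging a healthy person costs 1 unit. The same scores lead to a different decision threshold depending on which cost function is used.

```python
# Hypothetical risk scores from a classifier and true outcomes (1 = became ill).
SCORES = [0.10, 0.20, 0.25, 0.30, 0.40, 0.55, 0.70, 0.85]
ILL = [0, 0, 0, 1, 0, 0, 1, 1]


def total_cost(threshold, fn_cost, fp_cost, scores=SCORES, ill=ILL):
    """Cost of flagging everyone whose score is >= threshold.

    A missed ill person (false negative) costs fn_cost;
    a healthy person wrongly flagged (false positive) costs fp_cost.
    """
    cost = 0.0
    for score, outcome in zip(scores, ill):
        flagged = score >= threshold
        if outcome == 1 and not flagged:
            cost += fn_cost
        elif outcome == 0 and flagged:
            cost += fp_cost
    return cost


def best_threshold(fn_cost, fp_cost, candidates):
    """Candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: total_cost(t, fn_cost, fp_cost))


candidates = [0.15, 0.28, 0.5, 0.6, 0.8]
print(best_threshold(1, 1, candidates))  # symmetric cost (plain error count) -> 0.6
print(best_threshold(5, 1, candidates))  # missing an ill person is 5x worse -> 0.28
```

With the symmetric cost, the higher threshold wins because it makes the fewest mistakes overall; once missed illnesses are weighted more heavily, the lower threshold wins even though it flags two healthy people.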

Last modified: 00:26


Write a program in Python to calculate the factorial value of a number. When we talk about the factorial of a number, then the factorial of tha...